‘The task consists of labeling the MNIST testing dataset’. That’s what we were told in the Machine Learning lecture about the newest programming task. And it left us all petrified (kind of 😀 ). In groups of two we left the lecture and started on that interesting task.

We decided to implement handwritten digit recognition using a Naive Bayes classifier. So why Naive Bayes and not convolutional nets? As ‘ML noobs’ we wanted to keep it simple, and we also wanted to write our own code (in Python with matplotlib). Besides, the whole theory behind the Naive Bayes classifier is really fascinating: it assumes that the value of each feature is independent of every other feature given the class variable (which is practically never true), and it still produces correct classifications.

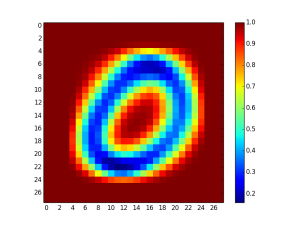

As we all know, we have to use Bayes’ rule. Our prior for each class is (number of samples in the class) / (total number of samples). As the likelihood we simply counted how often each pixel is black in the training images of a class, or rather, we used the probability that the pixel is black given the class. We also added ‘virtual’ counts for numerical stability, for the case that a count is zero, since a single zero probability would wipe out the whole product. For example, if pixel 12 is never black in the training images of class ‘2’, the probability that it is black given class ‘2’ becomes 0.01 instead of 0. We got some nice heat maps, like this one for zero:

After training our classifier we moved on to recognizing the test set. For that we worked with log-likelihoods, which make everything much easier because the logarithm turns a product of probabilities into a sum (and avoids the numerical underflow of multiplying 784 small pixel probabilities). Unlike the classic approach, we chose the class with the smallest score as the classification. That’s simply because our algorithm summed up negated log-probabilities, so the smallest sum corresponds to the highest probability. The usual decision rule would be to choose the outcome with the highest probability.
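The training and classification steps described above can be sketched in a few lines of NumPy. This is a minimal sketch, not our original code: it assumes the 28x28 images are flattened to 784 values and binarized (1 = black pixel), and it uses one virtual count per outcome (`alpha = 1`, i.e. Laplace smoothing), which is an assumed value. The function names `fit` and `predict` are hypothetical.

```python
import numpy as np

# Assumption: each image is a flattened, binarized vector (1 = black, 0 = white).

def fit(X, y, n_classes=10, alpha=1.0):
    """Estimate class priors and P(pixel j is black | class c).

    alpha is the (assumed) number of virtual black and virtual white
    observations added per pixel, so no probability is exactly 0 or 1.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    priors = np.empty(n_classes)
    p_black = np.empty((n_classes, X.shape[1]))
    for c in range(n_classes):
        Xc = X[y == c]
        priors[c] = len(Xc) / len(X)  # (samples in class) / (total samples)
        # black-pixel counts plus virtual counts, over class size plus virtual counts
        p_black[c] = (Xc.sum(axis=0) + alpha) / (len(Xc) + 2 * alpha)
    return priors, p_black


def predict(X, priors, p_black):
    """Pick the class with the smallest summed negative log-probability."""
    X = np.asarray(X, dtype=float)
    # -log P(x | c) = -sum_j [x_j log p_cj + (1 - x_j) log(1 - p_cj)]
    neg_log_lik = -(X @ np.log(p_black).T + (1 - X) @ np.log(1 - p_black).T)
    cost = neg_log_lik - np.log(priors)  # shape (n_samples, n_classes)
    # -log is strictly decreasing, so the smallest cost is the largest posterior
    return np.argmin(cost, axis=1)


# Toy 4-pixel "images" with two classes, only to show the call sequence
X_train = np.array([[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]])
y_train = np.array([0, 0, 1, 1])
priors, p_black = fit(X_train, y_train, n_classes=2)
print(predict([[1, 1, 0, 0], [0, 0, 1, 1]], priors, p_black))  # [0 1]
```

Taking `argmin` of the negated sum and `argmax` of the plain log-posterior give the same class; the sketch keeps the "smallest score wins" convention from the text.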

Here are our results in a nutshell:

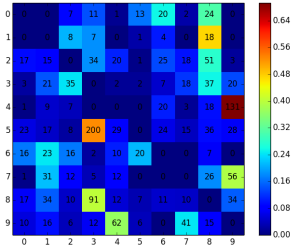

Classic Naive Bayes Approach

We started with the classic Naive Bayes classifier, which means a single classifier trained on all 10 classes (0-9).

Below you can see its confusion matrix. The x-axis represents the real class and the y-axis the predicted class. As you can see, it had a huge problem differentiating between 4 and 9, and also between 3 and 5.

The accuracy was 83.55% (our best result).

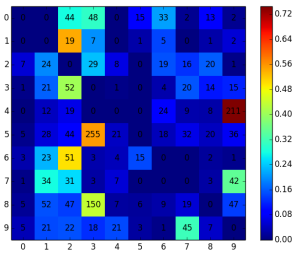
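A confusion matrix with the layout described for this classifier (real class on the x-axis, predicted class on the y-axis) can be built by counting into a 10x10 array indexed as `[predicted, real]`; the accuracy is then the sum of the diagonal divided by the total. A minimal NumPy sketch, with hypothetical helper names and made-up toy labels (not our MNIST results):

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes=10):
    """Rows = predicted class (y-axis), columns = real class (x-axis)."""
    m = np.zeros((n_classes, n_classes), dtype=int)
    for real, pred in zip(y_true, y_pred):
        m[pred, real] += 1
    return m

def accuracy(m):
    # correct predictions lie on the diagonal
    return np.trace(m) / m.sum()

def most_confused_pair(m):
    """Return (real, predicted) for the largest off-diagonal entry."""
    off = m.copy()
    np.fill_diagonal(off, 0)
    pred, real = np.unravel_index(np.argmax(off), off.shape)
    return int(real), int(pred)

# Made-up labels, only to illustrate the calls
y_true = [4, 4, 9, 9, 3, 5, 4, 9]
y_pred = [4, 9, 9, 9, 3, 3, 9, 9]
m = confusion_matrix(y_true, y_pred)
print(accuracy(m))            # 0.625
print(most_confused_pair(m))  # (4, 9)
```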

Naive Bayes with Bagging

We tried to improve our accuracy with bagging, a well-known regularization technique.

So we had five Bayes classifiers, each distinguishing between two classes:

(0,1), (2,5), (3,4), (6,9) and (7,8)

Each classifier had an accuracy rate of at least 93%.

But the whole model’s accuracy was 81.06%.
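The post doesn’t say how a test image was routed to one of the five pairwise classifiers or how their outputs were merged into one of ten labels, so the sketch below only covers a single pair. It is a minimal sketch under assumptions: binarized images, one virtual count per outcome (`alpha = 1`), and a decision made by the log-likelihood ratio plus the log prior ratio. The names `fit_pair`, `log_joint` and `predict_pair` are hypothetical.

```python
import numpy as np

def fit_pair(X, y, a, b, alpha=1.0):
    """Train a Bernoulli Naive Bayes that only sees samples of classes a and b."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    keep = (y == a) | (y == b)  # drop every other digit
    X, y = X[keep], y[keep]
    params = {}
    for c in (a, b):
        Xc = X[y == c]
        prior = len(Xc) / len(X)
        p_black = (Xc.sum(axis=0) + alpha) / (len(Xc) + 2 * alpha)  # virtual counts
        params[c] = (prior, p_black)
    return params

def log_joint(x, prior, p_black):
    """log P(c) + log P(x | c) for one binarized image x."""
    x = np.asarray(x, dtype=float)
    return np.log(prior) + x @ np.log(p_black) + (1 - x) @ np.log(1 - p_black)

def predict_pair(x, params, a, b):
    """Log-likelihood ratio plus log prior ratio: positive -> a, otherwise b."""
    score = log_joint(x, *params[a]) - log_joint(x, *params[b])
    return a if score > 0 else b

# Toy 4-pixel "images"; the sample labelled 7 is ignored by the (6, 9) classifier
X_train = np.array([[1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1], [1, 1, 1, 1]])
y_train = np.array([6, 6, 9, 9, 7])
params = fit_pair(X_train, y_train, 6, 9)
print(predict_pair([1, 1, 0, 0], params, 6, 9))  # 6
print(predict_pair([0, 0, 1, 1], params, 6, 9))  # 9
```

Because each pairwise model only ever sees two digits, it will always answer with one of those two, even for an image of a third digit; how that gets resolved depends on the combination scheme, which this sketch leaves open.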

I think our results weren’t so bad. We also tried subsampling the images, which was a crap idea; I don’t know why a paper we found on the internet suggested it. Maybe we should have used boosting instead of bagging, since in boosting the classifiers aren’t independent of each other: each new classifier concentrates on the samples the previous ones got wrong.
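For comparison, textbook bagging (bootstrap aggregating) trains every ensemble member on its own bootstrap resample of the training set and combines them by majority vote. No member ever sees another member’s output, which is exactly the independence that boosting gives up. This is a generic sketch, not the pairwise setup described above; it assumes binarized images, uses a compact Bernoulli Naive Bayes as the base model, and smooths the priors too (an assumption) so that a class missing from a bootstrap sample doesn’t produce `log(0)`. All function names are hypothetical.

```python
import numpy as np

def fit_nb(X, y, n_classes, alpha=1.0):
    """Bernoulli Naive Bayes with virtual counts on priors and pixels."""
    counts = np.bincount(y, minlength=n_classes)
    priors = (counts + alpha) / (len(y) + n_classes * alpha)
    black = np.array([X[y == c].sum(axis=0) for c in range(n_classes)])
    p_black = (black + alpha) / (counts[:, None] + 2 * alpha)
    return np.log(priors), np.log(p_black), np.log(1 - p_black)

def predict_nb(X, model):
    log_prior, log_p, log_q = model
    return np.argmax(log_prior + X @ log_p.T + (1 - X) @ log_q.T, axis=1)

def bagging(X, y, n_classes, n_models=5, seed=0):
    """Train n_models classifiers, each on an independent bootstrap resample."""
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(X), size=len(X))  # sample with replacement
        models.append(fit_nb(X[idx], y[idx], n_classes))
    return models

def predict_bagging(X, models, n_classes):
    votes = np.stack([predict_nb(X, m) for m in models])  # (n_models, n_samples)
    # majority vote per sample; ties go to the smaller label
    return np.array([np.bincount(v, minlength=n_classes).argmax() for v in votes.T])

X_train = np.array([[1, 1, 0, 0]] * 10 + [[0, 0, 1, 1]] * 10)
y_train = np.array([0] * 10 + [1] * 10)
models = bagging(X_train, y_train, n_classes=2)
print(predict_bagging(np.array([[1, 1, 0, 0], [0, 0, 1, 1]]), models, 2))  # [0 1]
```

In boosting, by contrast, the resampling or weighting for model k would depend on the errors of models 1..k-1, so the loop could not be run in parallel.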

Fact is: we learnt a lot from this programming task and we also had lots of fun.

You can find the MNIST database here. And if you have some ideas, or got better results using Naive Bayes, please don’t hesitate to let me know 🙂

Hi. Hope you’re doing well.

Very nice work! I’m learning ML on my own and I want to practice logistic regression and naive Bayes with the MNIST data set. I was wondering if you would like to help me in my journey to AI.

I’m so sorry. I just read your message since I stopped writing on my blog 😦 and I didn’t get a notification somehow. I hope you’re doing well!